

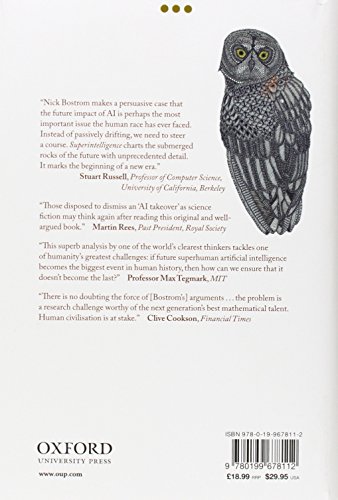

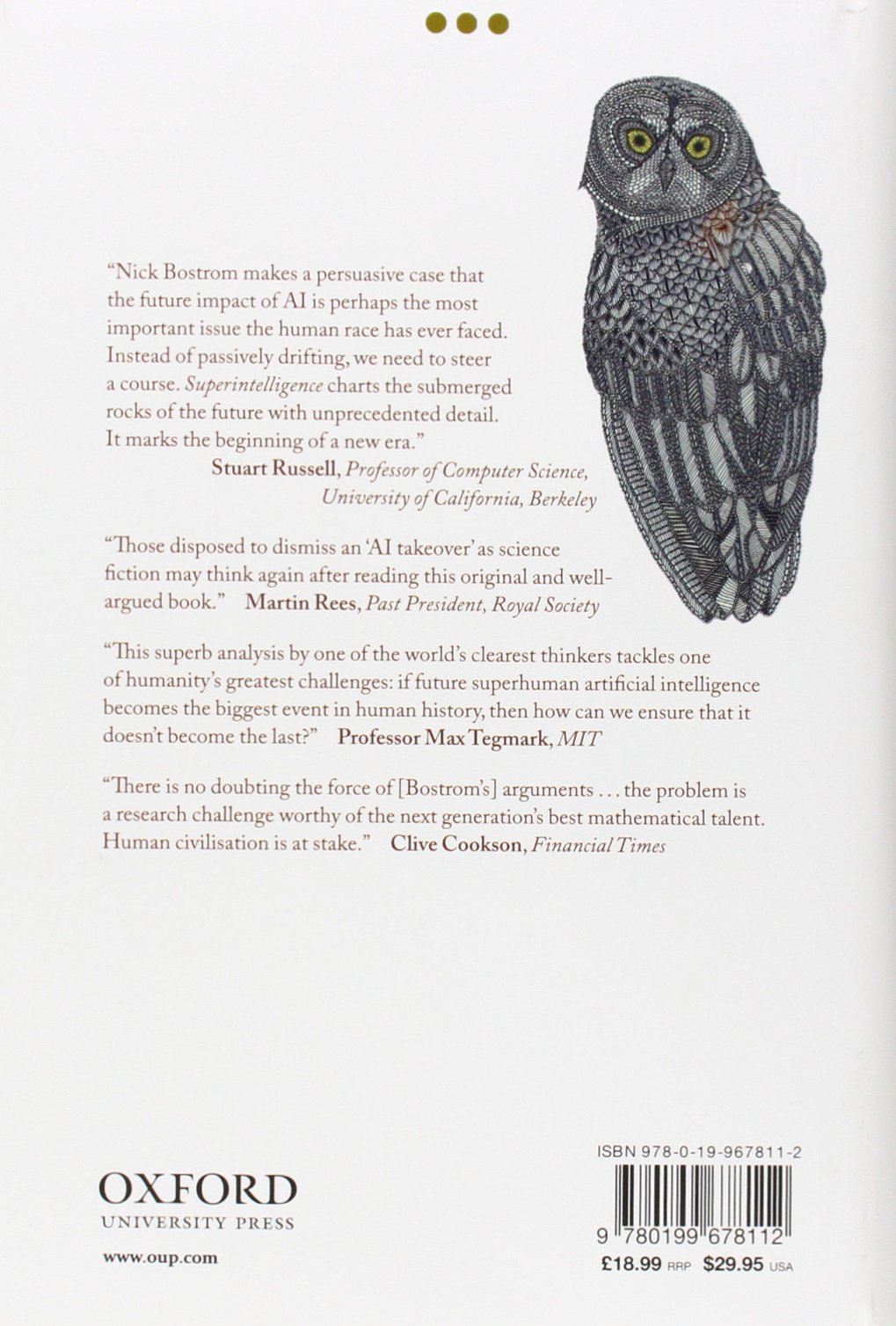

Superintelligence: Paths, Dangers, Strategies [Bostrom, Nick]

| Detail | Value |
| --- | --- |
| Best Sellers Rank | #181,658 in Books; #3 in Artificial Intelligence (Books); #15 in Artificial Intelligence & Semantics |
| Customer Reviews | 4.3 out of 5 stars (4,777) |
| Dimensions | 9.3 x 1 x 6.2 inches |
| Edition | 1st |
| ISBN-10 | 0199678111 |
| ISBN-13 | 978-0199678112 |
| Item Weight | 1.48 pounds |
| Language | English |
| Print length | 352 pages |
| Publication date | September 3, 2014 |
| Publisher | Oxford University Press |

E**E

A good overview of issues in an age of AI

I picked this book up because I have a kid at Caltech majoring in AI programming and machine learning. He seems to see only upside, with no real concerns about 99% of the population being put out of work, and what I believe is inadequate apprehension about what could go wrong. Mom is a huge fan of Stephen Hawking, and he was more than a bit apprehensive about the potential problems with self-learning machines. Most of the books and articles I have read on the topic are cursory or naive. Nick Bostrom's book is fairly comprehensive and in depth. I am enjoying it as much as an excellent read in philosophy of science as I am for his expanding the boundaries of the conversation, indeed, broaching it in many areas. I honestly do not know whether he says everything which needs to be said, but he has clearly thought it through and done a good deal of exploring, consulting, conversing, and collaborating. It is far and away the best book I have read on the topic (though there are some good pieces in MIT Technology Review as well).

This is a book which is important and timely. We must seriously consider and weigh the potential for harm as well as good before creating a monster. While there may be areas which he has missed, I feel that when I read about a brute force approach to building human level AI by recreating a brain at the quantum level using Schrödinger's equation, the man is clearly pushing the boundaries. If nothing else it is a very good start to an important conversation.

I picked this up because I was considering sending a copy to my son, but read it first because he is a busy guy and chooses his side reading carefully. There are books and articles I might mention or even recommend, and others I tell him not to waste his time on; this is one I will be sending him (though I would be very very surprised if someone at Caltech did not broach ...all of what is contained here). I will let him determine if it is redundant.

It is well written and thorough, and also very approachable. He says in the prologue that overly technical sections may be skipped without sacrificing any meaning. I have not encountered one I needed to skip, and have, in fact, very much enjoyed the level of discourse. Read it if you are in the field to make sure you are covering all the bases. Read it if you are a scientist, philosopher, or engineer to enjoy some very good writing. Read it if you are just encountering AI and want to quickly get up to speed on the issues. It is not only a book I would recommend, but have, to anyone who would listen ;)

R**B

Fundamental to current AI thinking

This was written 10 years ago, and formed the basis of a lot of thought about approaches to preparing for and dealing with an intelligence explosion. It’s extremely thorough, and goes through a series of philosophical and practical possibilities, and strategies for dealing with them (as the title says). It kind of bogs down at the 3/4 mark for modern readers, because realistically some of the questions he tackles are now moot (should we pursue whole brain emulation before synthetic AI? will the world band together to collaborate on a global AI?) and reading through all the paths and strategies has become less relevant. But he had a lot of interesting things to say on the side of collaboration that are definitely worth a re-read, and the general substance of the book is fundamental to understanding more current approaches to an intelligence explosion.

J**R

Interesting for anyone, but a must-read for all AI researchers

The author has obviously put a huge amount of thought into this topic. The number of angles he considers in terms of implementation timelines, methodologies, pros and cons for each, and the likelihood of success of different methodologies over various timeframes is impressive. For example, in discussing the various ways in which AI might be implemented, he concludes that AI (and subsequently, super-intelligent AI) via whole brain emulation is essentially guaranteed to happen due to ever-improving scanning techniques such as MRI or electron microscopy, ever-increasing computing power, and the fact that understanding the brain is not necessary to emulate the brain. Rather, once you can scan it in enough detail, and you have enough hardware to simulate it, it can be done even if the overarching design is a black box to you (individual neurons or clusters of neurons can already be simulated, but we lack the computing power to simulate 10 billion neurons, and we lack the knowledge of how they are all connected in a human brain -- something which various scanning projects are already tackling). However, he also concludes that due to the time it will take to achieve the necessary advances in scanning and hardware, whole brain emulation is unlikely to be how advanced AI is actually, or initially, achieved. Rather, more conventional AI programming techniques, while perhaps posing a greater need for understanding the nature of intelligence, have a much-reduced hardware requirement (and no scanning requirement) and are likely to reach fruition first.

This is just one example. He slices and dices these issues more ways than you can imagine, coming to what is, in the end, a fairly simple conclusion (if I may inelegantly paraphrase): Super-intelligent AI is coming. It might be in 10 years, maybe 20, maybe 50, but it is coming. And, it is potentially quite dangerous because, by definition, it is smarter than you. So, if it wants to do you harm, it will and there will be very little you can do about it. Therefore, by the time super-intelligent AI is possible, we had better know not just how to make a super-intelligent AI, but a super-intelligent AI which shares human values and morals (or perhaps embodies human values and morals as we wish they were, since as he points out, we certainly would not want to use some people's values and morals as a template for an AI, and it may be hard to even agree on some such philosophical issues across widely-divergent cultures and beliefs).

This is a thought-provoking book. It raises issues that I never even would have thought of had the author not pointed them out. For example, "infrastructure proliferation" is a bizarre, yet presumably possible, way in which a super-intelligent (but in some ways, lacking common sense) AI could end life as we know it without even being malicious -- just indifferent to us while pursuing pedestrian goals in what is, to it, a perfectly logical manner.

I share the author's concerns. Human-level (much less super-intelligent) AI seems far away. So, why worry about the consequences right now? There will be plenty of time to deal with such issues as the ability to program strong AI gets closer. Right? Maybe, maybe not. As the author also describes in detail, there are many scenarios (perhaps the most likely ones) where one day you don't have AI, and the next you do (e.g., only a single algorithm tweak was keeping the system from being intelligent and with that solved, all of a sudden your program is smarter than you -- and able to recursively improve itself so that days, or maybe hours or minutes later, it is WAY smarter than you). I hope AI researchers take heed of this book. If the ability to program goals, values, morals and common sense into a computer is not developed in parallel with the ability to create programs that dispassionately "think" at a very high level, we could have a very big problem on our hands.

I**Y

An interesting and thought provoking book

R**U

I highly recommend this book to anyone who wants to learn more about the future of AI and its impact on humanity. The author, Nick Bostrom, is a leading expert on AI safety and ethics. He explores the possible scenarios, challenges, and solutions for creating and controlling AI systems that are smarter than humans. He also provides a clear and systematic framework for thinking and talking about the complex issues that AI raises. This book is not only informative, but also engaging and thought-provoking. It challenges us to think ahead and act responsibly to ensure that AI is used for good and not evil. This book is a must-read for policymakers, researchers, and anyone who cares about the fate of our civilization.

H**O

An excellent book for learning about the principles of AI

D**A

It is just what I wanted: a paperback, and very easy to carry around.

R**S

Very difficult to get through and very pretentious. Honestly, I didn’t get the rave reviews.
